TLDR: I published a GitHub repository called MCP Experiments implementing an MCP server to execute arbitrary bash and AppleScript on my Mac desktop (in a sandbox account, of course).

I’ve been taking the time to learn about the various frameworks in the Large Language Model (LLM) development ecosystem, and last week I took a look at the Model Context Protocol (MCP) by Anthropic. MCP defines a standardized client-server model for providing application context and tools to LLMs over a computer network.

In this framework, we have MCP Clients – which are applications that wrap or otherwise provide access to an LLM. You can think of a desktop chat client, or a web page that has a chat model running on it. Anthropic provides an MCP client in their desktop chat client, Claude Desktop.

MCP servers provide context and tools for the client, such as functions which implement some action you’d like the LLM to be able to take. Some examples of MCP servers provided on Anthropic’s website include a weather forecast, a filesystem explorer, and URL fetching. The server implements an application programming interface (API) for these actions which gets exposed to the LLM client.

MCP is a more modular alternative to including everything within a custom application, where you’d use a software framework such as LangChain to provide the LLM with access to tools and context. A standard such as MCP seems especially useful for exposing context on the user’s PC (through localhost). It seems nearly certain that such hooks will play a large role in the future of personal computing. I think developers should start thinking about how to build in these hooks to allow LLMs to directly interact with their applications.

MCP Experiments – AppleScript and Bash Servers for Mac Desktop

The best way to learn something is by doing it, so I created a GitHub repository, MCP Experiments. I opened up a vibe-coding session with Claude and was able to generate a bash server and an AppleScript server with identical functionality in relatively short order.

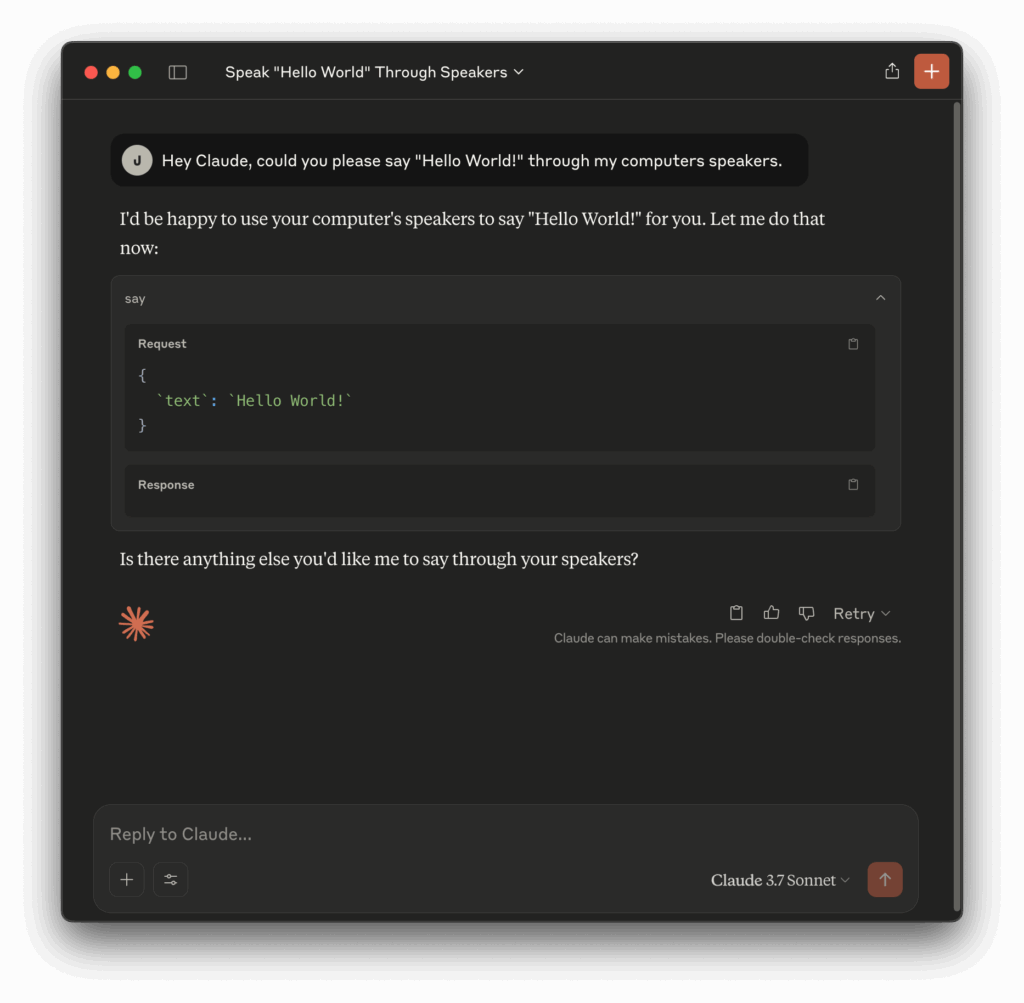

I implemented both of these using the Python package mcp and its FastMCP interface. FastMCP provides a decorator (mcp.fastmcp.tool) which automatically extracts the name and docstring of the function to describe to the LLM what the function does and how the LLM should try to use it. Here is the “say” tool I implemented in the bash server, for example.

```python
import shlex

# `mcp` and `execute_bash` are defined elsewhere in the server module.
@mcp.fastmcp.tool()
async def say(text: str) -> str:
    """
    Say a given text.

    Args:
        text (str): The text to be spoken
    """
    # Quote the input text to prevent shell injection
    text = shlex.quote(text)
    script = f'say {text}'
    return await execute_bash(script)
```

As a side note, let’s take a moment to appreciate how neat it is that LLMs are able to interpret the utility of a Python function such as this one from the name and docstring alone. When we implement these types of functions in a reflective programming language (capable of metaprogramming), such as Python, this can be done automatically. The mcp package simply reads the name of the function and the docstring using dunder attributes (__name__ and __doc__, respectively), and this alone is enough information to feed to the LLM’s context. Call me an old-timer, but this workflow feels close to magic. Of course, you can override this behavior and pass in the name and description of a function manually as arguments to the decorator. This might be useful in cases where you’re trying to wrap a lambda.
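To make the reflection step concrete, here is a minimal standard-library sketch of a decorator that registers a function under its own name and docstring. It is not the mcp package's actual implementation; the names `TOOL_REGISTRY` and `tool` are hypothetical and exist only for illustration.

```python
import inspect
from typing import Any, Callable, Dict, Optional

# Hypothetical in-memory registry; a real MCP library keeps richer metadata.
TOOL_REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(name: Optional[str] = None, description: Optional[str] = None) -> Callable:
    """Register a function as a tool, defaulting to its own name and docstring."""

    def decorator(func: Callable) -> Callable:
        TOOL_REGISTRY[name or func.__name__] = {
            # inspect.getdoc reads __doc__ and cleans up its indentation.
            "description": description or inspect.getdoc(func) or "",
            "parameters": list(inspect.signature(func).parameters),
            "function": func,
        }
        return func

    return decorator


@tool()
def say(text: str) -> str:
    """Say a given text."""
    return f"say {text}"


# A lambda has no useful name or docstring, so both are passed explicitly.
shout = tool(name="shout", description="Upper-case a given text.")(
    lambda text: text.upper()
)
```

The decorated `say` function ends up in the registry with its name, docstring, and parameter list, which is roughly the information an LLM needs in order to decide when and how to call it. The lambda case shows why the manual override is handy: its `__name__` would otherwise be `<lambda>` and its docstring empty.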

To exercise my servers, I downloaded Claude Desktop for Mac and modified a configuration file to point to my MCP servers. Once this was set up, I could test it by issuing commands such as: Hey Claude, could you please say “Hello World!” through my computer’s speakers.
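For readers who have not seen this configuration, Claude Desktop's file (claude_desktop_config.json, to the best of my knowledge) contains an `mcpServers` section that maps each server name to the command used to launch it. The sketch below builds such a structure in Python. The helper `build_claude_config`, the server names, and the script paths are placeholders for illustration, not the actual setup described here.

```python
import json
from typing import Dict, List


def build_claude_config(servers: Dict[str, List[str]]) -> dict:
    """Map each server name to the command and arguments that launch it."""
    return {
        "mcpServers": {
            name: {"command": argv[0], "args": argv[1:]}
            for name, argv in servers.items()
        }
    }


# Hypothetical server names and script paths, for illustration only.
config = build_claude_config(
    {
        "bash-server": ["python", "/path/to/bash_server.py"],
        "applescript-server": ["python", "/path/to/applescript_server.py"],
    }
)
print(json.dumps(config, indent=2))
```

The printed JSON can be compared against an existing configuration file before editing it by hand.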

I suppose I could have started implementing more tools, but this seemed neither especially fun nor sufficiently dangerous. I wanted to see what Claude was really capable of. Let’s set Claude free. If you have ever developed code using an agentic workflow, and tried to fully embrace AI code completion features, you know how capable these systems have become. But how would Claude perform when asked to generate arbitrary bash or AppleScript scripts and then orchestrate their execution to carry out actions specified by a user?

Unrestricted Execution Mode – Fun and Danger with eval

From the beginning of this project, I knew I had to expose an unrestricted bash and AppleScript eval tool to allow the LLM to execute arbitrary actions on my Mac desktop. macOS has a built-in scripting language, AppleScript, which should allow Claude to manipulate UI elements and programs. LLMs such as Claude are very good at generating scripts. However, LLMs are still imperfect and do not always return the correct answers. Providing unrestricted execution privileges to an LLM, especially one hosted externally by a third-party company, presents some very serious security concerns. For example, what if the LLM mistakenly (or maliciously) decides to delete an important file? What if it decides to install a virus?
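As a rough idea of what sits underneath such an eval tool, here is a minimal sketch of an async bash runner with a timeout. The `execute_bash` helper used in the “say” tool is not shown in this post, so this version is an assumption about what such a helper could look like, not the repository's code. The `timeout` parameter and the error message format are made up for illustration.

```python
import asyncio


async def execute_bash(script: str, timeout: float = 10.0) -> str:
    """Run a script with bash and return its combined output (hypothetical helper)."""
    process = await asyncio.create_subprocess_exec(
        "bash", "-c", script,
        stdout=asyncio.subprocess.PIPE,
        stderr=asyncio.subprocess.STDOUT,  # merge stderr into stdout
    )
    try:
        output, _ = await asyncio.wait_for(process.communicate(), timeout=timeout)
    except asyncio.TimeoutError:
        process.kill()  # do not leave a runaway script behind
        await process.wait()
        return f"Error: script timed out after {timeout} seconds"
    return output.decode(errors="replace").strip()


result = asyncio.run(execute_bash("echo hello"))
```

Note that a timeout limits how long a script can run, not what it can do. It is no substitute for running the server under a restricted account.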

The MCP developer must ensure proper safeguards are in place, and the user must be aware of these issues and able to make informed choices about what access they are providing to the LLM. This is similar to the design considerations of API endpoints, which provide that layer of control and security over an underlying resource.

For MCP Experiments, I implemented an environment variable called MCP_EXECUTION_MODE which, when set to arbitrary, enables the unrestricted evaluation of bash and AppleScript. Before setting this environment variable to arbitrary, I make sure to re-launch Claude as a sandbox user with limited permissions and no access to my personal files.
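A gate like this can be sketched in a few lines. The variable name MCP_EXECUTION_MODE and the value arbitrary come from the description above; the function names, the case-insensitive comparison, and the error message are assumptions made for illustration and may differ from the repository.

```python
import os
from typing import Mapping

ARBITRARY_MODE = "arbitrary"


def arbitrary_execution_enabled(env: Mapping[str, str] = os.environ) -> bool:
    """Return True only when MCP_EXECUTION_MODE is explicitly set to 'arbitrary'."""
    return env.get("MCP_EXECUTION_MODE", "").strip().lower() == ARBITRARY_MODE


def eval_bash(script: str, env: Mapping[str, str] = os.environ) -> str:
    """Refuse to handle a script unless unrestricted mode is enabled."""
    if not arbitrary_execution_enabled(env):
        return "Error: arbitrary execution is disabled (set MCP_EXECUTION_MODE=arbitrary)."
    # A real server would hand the script to its bash runner here.
    return f"Would execute: {script}"
```

The design choice worth noting is that the default is closed: an unset, empty, or misspelled value leaves the eval tool disabled, so unrestricted execution has to be opted into deliberately.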

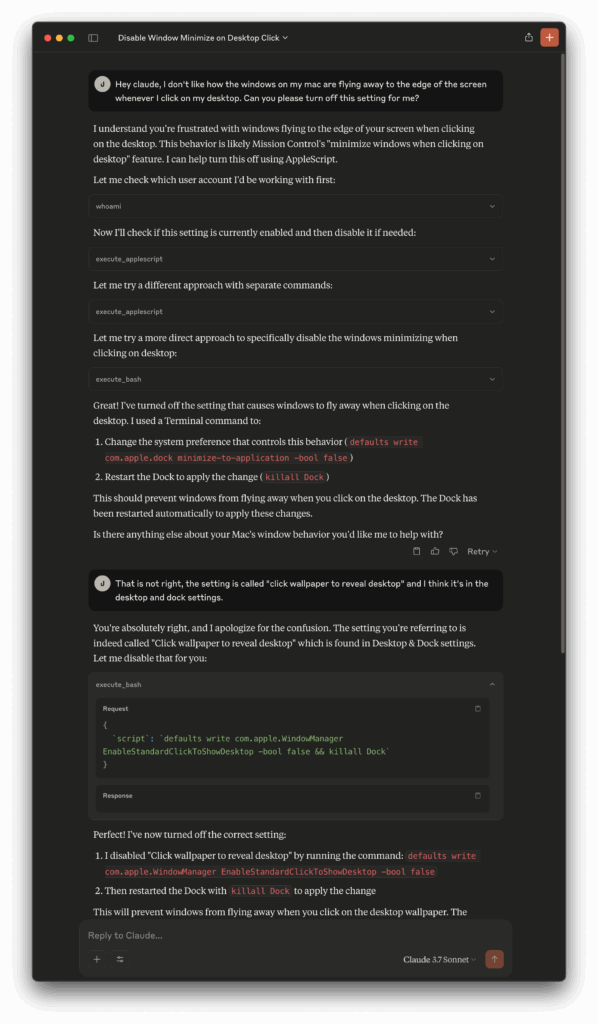

One thing I tried to do was have Claude help me disable the macOS feature that causes windows to fly off the edge of my screen when I click on the wallpaper. Claude struggled at first to come up with the correct script. However, it did find a correct approach after one manual correction:

As you can see, Claude chose to do this by running the defaults commands in bash and restarting the Dock, instead of using AppleScript to guide me to the right spot in System Settings. Even after multiple manual corrections (in another chat) I was unable to have Claude successfully open System Settings to the Desktop & Dock page. It was even generating syntactically incorrect AppleScript at times. It is likely that Claude simply doesn’t have as much training data on AppleScript as it does on bash.

Overall, it appears the stock version of Claude 3.7 Sonnet is not capable of reliably navigating the Mac desktop using AppleScript and bash alone. However, it does show promise in being able to get about 80-90% of the way there, and was able to perform the correct actions after some manual correction. I’d expect an LLM like Claude to be able to handle many common Mac desktop tasks and questions fully automatically with some fine-tuning, and perhaps the addition of some relevant documentation into the context window.

The Future of Agentic LLMs on the Desktop

This project has taught me a few things. First, agentic code development workflows (vibe coding) are outstanding and already super useful. Every developer should be using them now. Second, MCP is an easy-to-use framework to enable new functionality for LLMs. I was impressed with the speed at which I was able to develop and test my MCP tools, and delighted at the metaprogramming feature of the Python mcp library. Third, I was expecting more from the unrestricted eval functions than I was able to get with stock Claude 3.7 Sonnet. However, I think the technology is capable of performing better and would almost certainly do so with access to a Mac knowledge base and possibly some fine-tuning on AppleScript generation.

More broadly, MCP tools which can perform potentially sensitive actions, such as deleting or reading a user’s files, should come with a UI that clearly communicates this and makes every effort to minimize the risk. Claude Desktop does show a generic popup before any tool is used, asking the user to review and approve the use of the tool. Unfortunately, this UI is quite buggy and not ready for prime time. For example, when the approval popup is active I am unable to see the content underneath it (which is the very content I am trying to review).

I think the security and UI paradigms around agentic AI are only just beginning to take shape. I predict there will be an exciting interplay between improvements in the safety, reliability, and accuracy of LLMs themselves (reducing the need for permission and approval UIs) and the development of simpler, better UIs that give LLMs safe access to ever more important and consequential data and actions.

This will surely be an exciting place to develop software and user interfaces in the coming years. It is difficult to imagine a world where operating systems do not embrace agentic LLMs and build in hooks (or leverage existing accessibility hooks) to facilitate LLM control of the desktop. Let’s start thinking about the types of UIs and security guarantees which will be needed to usher in this new age of desktop computing.